An Overview of Logistic Regression in ML

Introduction:

Logistic regression is a supervised ML algorithm used to solve binary classification problems. "Binary" means two and "classification" means assigning something to a category, so logistic regression predicts which of two classes an input belongs to. The two classes can be written as true (presence) and false (absence), or as 1 and 0. As with the Linear Regression problem we discussed in blog 4, we give the model (discussed later) an input x and it predicts an output y. The false category is called the negative class and the true category is called the positive class.

How to achieve Logistic Regression:

To achieve logistic regression we use the sigmoid function, which maps any real number to a value between 0 and 1. We then apply a threshold to turn that value into a 0 or 1 prediction: if the output f(x) is greater than 0.5, the predicted class is 1; otherwise it is 0. We cannot do this with a plain linear function, as used in linear regression, because a linear function can output any real number, which cannot be read directly as a class label of 0 or 1.
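A minimal NumPy sketch of the sigmoid followed by the 0.5 threshold described above. The helper name `to_class` and the sample `z` values are made up for illustration; they are not part of the original code.

```python
import numpy as np


def sigmoid(z):
    # Maps any real number to a value strictly between 0 and 1
    return 1 / (1 + np.exp(-z))


def to_class(probability, threshold=0.5):
    # Hypothetical helper: label 1 when the probability is above 0.5, else 0
    return (probability > threshold).astype(int)


z = np.array([-4.0, -0.5, 0.5, 4.0])  # example linear outputs w*x + b
p = sigmoid(z)
labels = to_class(p)
print(p)       # every value lies between 0 and 1
print(labels)  # [0 0 1 1]
```

Negative linear outputs land below 0.5 and become class 0; positive ones land above 0.5 and become class 1.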

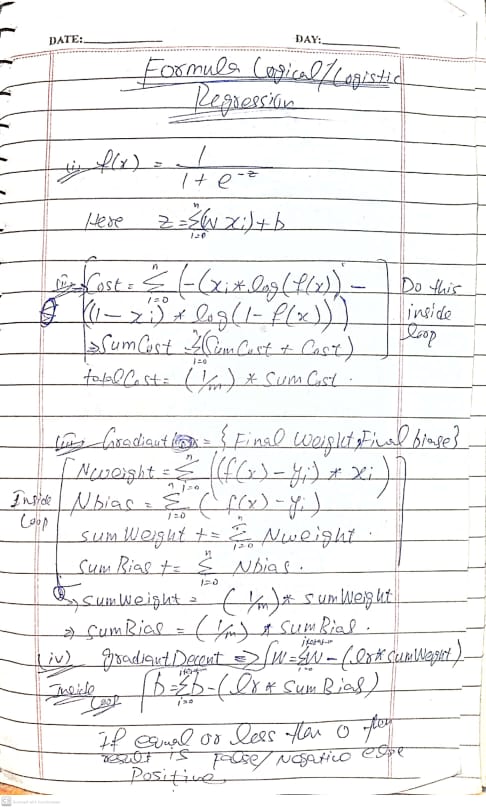

Sigmoid Formula:

$$g(z) = \frac{1}{1 + e^{-z}}, \qquad f_{w,b}(x) = g(wx + b)$$

An Overview of Logistic Regression with a Single Neuron:

As you can see, the input x is multiplied by the weight (w) and the bias (b) is added, giving z = wx + b. Sigma (σ) is the sigmoid activation function; applying it to z gives the output y. We then compare this output with the actual output (the label). We measure how close they are using the loss function: if the loss is small, the model is doing well; otherwise we update the weights and bias using gradient descent. We repeat this until the loss stops improving.
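The cycle described above (forward pass, loss, update) can be sketched for a single training example. This is an illustrative sketch, not the post's own code: the example input `x = 5`, its label `y = 1`, and the 50 update steps are assumed values chosen only to show the loss shrinking.

```python
import numpy as np


def sigmoid(z):
    return 1 / (1 + np.exp(-z))


def forward(x, w, b):
    # Single neuron: weighted input plus bias, then the sigmoid activation
    z = w * x + b
    return sigmoid(z)


def logistic_loss(y_hat, y):
    # Logistic loss for one example
    return -y * np.log(y_hat) - (1 - y) * np.log(1 - y_hat)


# Assumed example: one input of 5 whose actual label is 1
x, y = 5.0, 1
w, b, lr = 0.1, 1.0, 0.1

losses = []
for step in range(50):
    y_hat = forward(x, w, b)                 # predicted output
    losses.append(logistic_loss(y_hat, y))   # how far it is from the label
    error = y_hat - y                        # gradient of the loss w.r.t. z
    w -= lr * error * x                      # gradient descent step for w
    b -= lr * error                          # gradient descent step for b

print(losses[0], losses[-1])  # the loss shrinks as w and b are updated
```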

Loss Function Formula:

$$L\left(f_{w,b}(x^{(i)}), y^{(i)}\right) = -y^{(i)} \log\left(f_{w,b}(x^{(i)})\right) - \left(1 - y^{(i)}\right) \log\left(1 - f_{w,b}(x^{(i)})\right)$$
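To see how this loss behaves, here is a small sketch that evaluates it for a few hand-picked predictions (the values 0.9 and 0.1 are arbitrary examples). When y = 1 only the first term survives, and when y = 0 only the second one does, so a confident wrong prediction is punished much more than a confident correct one.

```python
import numpy as np


def logistic_loss(f, y):
    # L(f, y) = -y*log(f) - (1 - y)*log(1 - f), for a prediction f in (0, 1)
    return -y * np.log(f) - (1 - y) * np.log(1 - f)


print(logistic_loss(0.9, 1))  # confident and correct: about 0.105
print(logistic_loss(0.1, 1))  # confident but wrong: about 2.303
print(logistic_loss(0.1, 0))  # confident and correct for class 0: about 0.105
```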

Cost Function Formula (the quantity that gradient descent minimizes):

$$J(w, b) = \frac{1}{m} \sum_{i=1}^{m} L\left(f_{w,b}(x^{(i)}), y^{(i)}\right)$$
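Gradient descent needs the derivatives of J. Using the standard identity for the sigmoid's derivative, the σ(1 − σ) factor cancels against the log terms of the loss, so each example contributes just its prediction error f − y (times x for the weight). Here α is the learning rate (`lr` in the code); these are the quantities the code computes in `gradientLoss` and applies in `gradient`:

```latex
f_{w,b}(x) = \sigma(wx + b), \qquad \sigma(z) = \frac{1}{1 + e^{-z}}, \qquad \sigma'(z) = \sigma(z)\bigl(1 - \sigma(z)\bigr)

\frac{\partial J}{\partial w} = \frac{1}{m} \sum_{i=1}^{m} \left( f_{w,b}(x^{(i)}) - y^{(i)} \right) x^{(i)}

\frac{\partial J}{\partial b} = \frac{1}{m} \sum_{i=1}^{m} \left( f_{w,b}(x^{(i)}) - y^{(i)} \right)

w := w - \alpha \frac{\partial J}{\partial w}, \qquad b := b - \alpha \frac{\partial J}{\partial b}
```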

Notes:

Code:

import numpy as np
import matplotlib.pyplot as plt

# Training data: one feature per example and its binary label (0 or 1)
x_train = np.array([2, 3, 5, 6, 9, 1])
y_train = np.array([0, 0, 1, 1, 1, 0])


def calculate(x, w, b):
    # Linear part of the model: z = w*x + b
    fx = np.dot(x, w) + b
    return fx


def sigmoid(z):
    # Sigmoid activation: maps any real z to a value in (0, 1).
    # np.exp uses the exact value of e instead of the approximation 2.71.
    fx = 1 / (1 + np.exp(-z))
    return fx


def cost(x, y, w, b):
    # Average logistic loss J(w, b) over all m training examples
    m = len(x)
    sum_cost = 0
    for i in range(m):
        fx = sigmoid(np.dot(x[i], w) + b)
        # Clip so log() never receives exactly 0 when the sigmoid saturates
        fx = np.clip(fx, 1e-15, 1 - 1e-15)
        # The loss compares the prediction with the label y[i], not the input x[i]
        t_cost = -(y[i] * np.log(fx)) - ((1 - y[i]) * np.log(1 - fx))
        sum_cost += t_cost
    total_cost = (1 / m) * sum_cost
    return total_cost


def gradientLoss(x, y, w, b):
    # Gradients of the cost J(w, b) with respect to w and b
    m = len(x)
    sum_loss_w = 0
    sum_loss_b = 0
    for i in range(m):
        fx = sigmoid(np.dot(x[i], w) + b)
        loss = fx - y[i]            # prediction error for example i
        sum_loss_w += loss * x[i]   # contribution to dJ/dw
        sum_loss_b += loss          # contribution to dJ/db
    sum_loss_w = (1 / m) * sum_loss_w
    sum_loss_b = (1 / m) * sum_loss_b
    return sum_loss_w, sum_loss_b


def gradient(x, y, w, b, lr, iterations):
    # Run gradient descent and record w and b after every iteration
    historyW = np.zeros(iterations)
    historyB = np.zeros(iterations)
    for i in range(iterations):
        gradientW, gradientB = gradientLoss(x, y, w, b)
        w = w - (lr * gradientW)
        b = b - (lr * gradientB)
        historyW[i] = w
        historyB[i] = b
    return historyW, historyB


def predict(x, w, b):
    # sigmoid(z) > 0.5 exactly when z > 0, so we can threshold the linear output
    result = np.zeros(len(x))
    for i in range(len(x)):
        output = calculate(x[i], w, b)
        if output > 0:
            result[i] = 1
        else:
            result[i] = 0
    return result


w = 0.1
b = 1
lr = 0.1

historyW, historyB = gradient(x_train, y_train, w, b, lr, 1000)

# Use the parameters reached after the last iteration
w = historyW[-1]
b = historyB[-1]
print("Final weight is ", w)
print("Final bias is ", b)

total_cost = cost(x_train, y_train, w, b)
print("Cost is ", float(total_cost))

output = predict(x_train, w, b)
print("Output is ", output)

# Sigmoid probabilities: multiply x by the weight, then add the bias
output2 = sigmoid(calculate(x_train, w, b))
print("Sigmoid output is ", output2)

plt.scatter(output, output2, c='r')
plt.title("Comparison")
plt.xlabel("predict")
plt.ylabel("sigmoid")
plt.show()
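The loops in the code above can also be written with NumPy array operations, which removes the Python-level loop over the examples. This is a sketch using the same data, starting values, and learning rate; the function names `cost_vectorized` and `train_vectorized` are hypothetical, and unlike `gradient` it returns only the final w and b rather than the full history.

```python
import numpy as np


def sigmoid(z):
    return 1 / (1 + np.exp(-z))


def cost_vectorized(x, y, w, b):
    # Average logistic loss over all examples at once (clipped to avoid log(0))
    f = np.clip(sigmoid(w * x + b), 1e-15, 1 - 1e-15)
    return np.mean(-y * np.log(f) - (1 - y) * np.log(1 - f))


def train_vectorized(x, y, w, b, lr, iterations):
    # Batch gradient descent: every update uses all m examples
    for _ in range(iterations):
        error = sigmoid(w * x + b) - y   # prediction errors, shape (m,)
        w -= lr * np.mean(error * x)     # dJ/dw
        b -= lr * np.mean(error)         # dJ/db
    return w, b


x_train = np.array([2, 3, 5, 6, 9, 1])
y_train = np.array([0, 0, 1, 1, 1, 0])

w, b = train_vectorized(x_train, y_train, 0.1, 1.0, 0.1, 1000)
print("w =", w, "b =", b)
print("cost =", cost_vectorized(x_train, y_train, w, b))
print("predictions =", (sigmoid(w * x_train + b) > 0.5).astype(int))
```

`np.mean(error * x)` is the same (1/m) sum over examples that `gradientLoss` accumulates one example at a time.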
